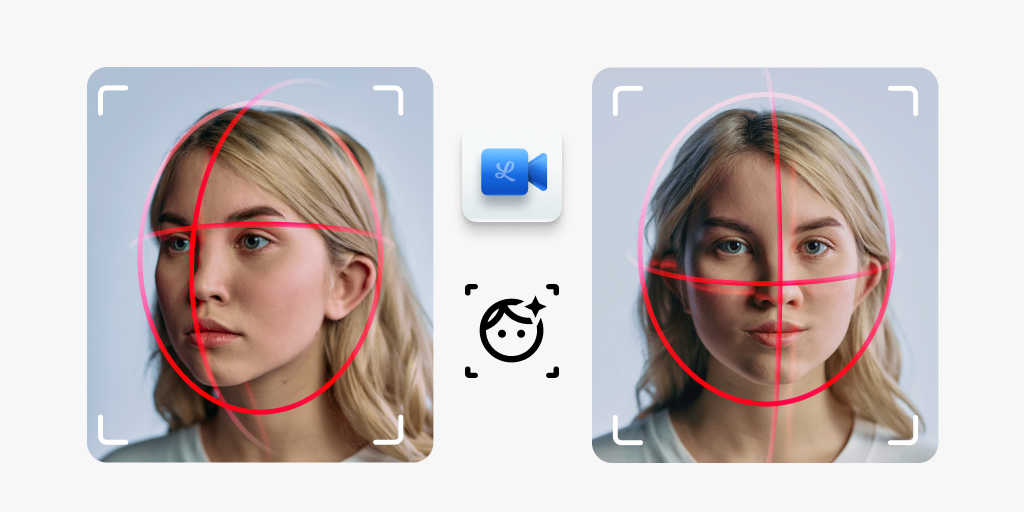

Artificial Intelligence (AI) is becoming increasingly mainstream. As this technology becomes part of people's everyday lives, it is worth understanding how you can use it in your video applications. One impactful application is real-time face detection in video streams, which opens up a range of features for your application. It can support security use cases by counting the people in a single stream, or provide an additional data point on the emotions an individual expresses during a session. Understanding these aspects of your video sessions can provide valuable insight for your business.

In this tutorial, we'll demonstrate how to leverage a third-party library to analyze local media, detect a single face, and stream the marked-up video over an SFU connection.

Let's start by creating several helper functions to manage LiveSwitch initialization steps. First, we need a helper to instantiate a Client object.

    let createClient = () => {
      return new fm.liveswitch.Client(gateway, appId, username, "chrome-js-mac");
    };

Next, we create a helper to generate a token.

    let getToken = (client, claims) => {
      return fm.liveswitch.Token.generateClientRegisterToken(
        appId,
        client.getUserId(),
        client.getDeviceId(),
        client.getId(),
        null,
        claims,
        secret
      );
    };

The final LiveSwitch helper handles creating the SFU upstream connection.

    let startSfuConnection = (channel, lm) => {
      writeStatus("Opening SFU connection");
      let audioStream = new fm.liveswitch.AudioStream(lm);
      let videoStream = new fm.liveswitch.VideoStream(lm);
      let connection = channel.createSfuUpstreamConnection(
        audioStream,
        videoStream
      );
      connection.open();
      writeStatus("Starting SFU Connection");
      return connection;
    };

Additionally, we'll add another helper to display informative messages on the screen.

    let writeStatus = function (message) {
      let sdiv = document.getElementById("status-div");
      let p = document.createElement("p");
      // textContent keeps error text from being interpreted as HTML
      p.textContent = message;
      sdiv.appendChild(p);
    };

Now, we incorporate a third-party library for face detection:

    await faceapi.loadTinyFaceDetectorModel(
      "https://justadudewhohacks.github.io/face-api.js/models"
    );
    await faceapi.loadFaceLandmarkTinyModel(
      "https://justadudewhohacks.github.io/face-api.js/models"
    );
    await faceapi.loadFaceExpressionModel(
      "https://justadudewhohacks.github.io/face-api.js/models"
    );

Following that, we use a local media stream from our camera as the initial video feed, displaying it on the screen. This step is essential, regardless of the chosen third-party library.

    try {
      // ...load third party face detection library
      stream = await navigator.mediaDevices.getUserMedia({
        audio: false,
        video: { width: 640, height: 480 }
      });
      // set the source of the video dom element to the local stream
      // (only reached when getUserMedia succeeded)
      video.srcObject = stream;
    } catch (ex) {
      writeStatus("ERROR: Getting local stream.");
    }

Once the local media stream is defined, we need to implement logic to react to the stream loading with metadata before we analyze it.
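As a minimal sketch of that step, the wait can be wrapped in a promise. The helper name `waitForEvent` is hypothetical and not part of LiveSwitch or face-api.js; it relies only on the standard `addEventListener` interface, and the stand-in `EventTarget` below plays the role of the video element so the snippet runs outside a page.

```javascript
// Hypothetical helper: resolves once `eventName` fires on `target`.
function waitForEvent(target, eventName) {
  return new Promise((resolve) => {
    // { once: true } removes the listener after the first event
    target.addEventListener(eventName, resolve, { once: true });
  });
}

// Stand-in for the <video> element so the sketch runs without a browser.
const fakeVideo = new EventTarget();
const ready = waitForEvent(fakeVideo, "loadedmetadata");
ready.then(() => console.log("metadata loaded, safe to start analysis"));

// In a page the browser fires this event; here we fire it by hand.
fakeVideo.dispatchEvent(new Event("loadedmetadata"));
```

In a page you would pass the real video element instead. The listener should be attached before `video.srcObject` is assigned, because an event that has already fired will not resolve the promise.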

We play the video, create a new canvas object that mirrors the video, and then draw facial recognition markup lines.

    video.play();
    // use the faceAPI to create a new canvas object
    const canvas = faceapi.createCanvasFromMedia(video);
    // get a 2D drawing context for the canvas
    const ctx = canvas.getContext("2d");
    // add the new canvas object to the DOM
    document.getElementById("canvasContainer").appendChild(canvas);
    // set the size of the canvas
    canvas.width = 640;
    canvas.height = 480;
    const displaySize = { width: 640, height: 480 };
    const minProbability = 0.05;
    // tell the FaceAPI what the dimensions of the video are
    faceapi.matchDimensions(canvas, displaySize);

Next, we establish rules for face detection, creating a function that is scheduled to run every millisecond to inspect the video element, perform face detection, and draw the results on the canvas object. An iteration is skipped while the previous one is still running. Note that the steps may vary for different libraries or if face expression detection is unnecessary.

    setInterval(async () => {
      // do not draw if we have set stop to true
      if (stop) {
        return;
      }
      // make sure we don't run this logic if a previous iteration is still running
      stop = true;
      // initialize detection options
      const options = new faceapi.TinyFaceDetectorOptions({
        inputSize: 128,
        scoreThreshold: 0.3
      });
      // call API to detect a single face with landmarks and track expressions
      let result = await faceapi
        .detectSingleFace(video, options)
        .withFaceLandmarks(true)
        .withFaceExpressions();
      // if the API found a face, we now need to draw it
      if (result) {
        // clear previous results
        ctx.clearRect(0, 0, 640, 480);
        // scale the detection results to the display size
        const resizedDetections = faceapi.resizeResults(result, displaySize);
        // draw the current video frame to the canvas
        ctx.drawImage(video, 0, 0, 640, 480);
        // draw detection information
        faceapi.draw.drawDetections(canvas, resizedDetections);
        // draw the landmarks into the canvas
        faceapi.draw.drawFaceLandmarks(canvas, resizedDetections);
        // draw the face expressions
        faceapi.draw.drawFaceExpressions(canvas, resizedDetections);
      }
      // since we are done drawing, disable circuit breaker
      stop = false;
      // run every millisecond
    }, 1);
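The `stop` flag in the loop above acts as a re-entrancy guard. One thing to watch for: if detection ever throws, the flag is never reset and the loop stops drawing. A minimal sketch of the same guard with `try`/`finally` follows. The helper name `skipIfBusy` is hypothetical, and the timer-based task is only a stand-in for the detection work.

```javascript
// Hypothetical helper: wraps an async task so overlapping calls are skipped.
function skipIfBusy(task) {
  let busy = false;
  return async function guarded(...args) {
    if (busy) {
      return false; // a previous run is still in flight, skip this tick
    }
    busy = true;
    try {
      await task(...args);
      return true;
    } finally {
      busy = false; // always release the guard, even if the task throws
    }
  };
}

// Stand-in for the detection step: counts runs and takes ~20 ms.
let runs = 0;
const detectOnce = skipIfBusy(async () => {
  runs += 1;
  await new Promise((resolve) => setTimeout(resolve, 20));
});

detectOnce(); // starts the first run
detectOnce(); // skipped, the first run has not finished
console.log(runs); // -> 1
```

With a wrapper like this, the interval callback could be passed through `skipIfBusy` once instead of managing the flag by hand.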

The canvas is displayed on the screen alongside the live video for demonstration purposes. We then capture a media stream from it, at up to 60 frames per second:

    let st = canvas.captureStream(60);

Finally, we take the modified video feed and push it out to others in our SFU upstream connection using the previously defined helper functions:

    // initialize client
    let client = createClient();
    // create a client token
    let token = getToken(client, claims);
    writeStatus("Starting Local Media");
    // create a local media object using the canvas stream
    var localMedia = new fm.liveswitch.LocalMedia(false, st);
    // add face detected media to the SFU upstream
    localMedia
      // start the media
      .start()
      .then((lm) => {
        // register the client using your token
        client
          .register(token)
          .then((channels) => {
            // Client registered.
            writeStatus("Connected to Server.");
            // fetch the channel you connected to
            let channel = channels[0];
            // Creates the new SFU Upstream Connection using your canvas stream
            let sfuConnection = startSfuConnection(channel, localMedia);
          })
          .fail((ex) => {
            writeStatus("ERROR: " + ex);
          });
      })
      .fail((ex) => {
        writeStatus("ERROR: " + ex);
      });

And there you have it! You are now streaming a modified video feed of yourself with face detection markup. From here, you could alert other users based on the detection results, use the detected expressions to estimate sentiment, or build many other features on top of it.
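As a rough illustration of using the detected expressions, the sketch below picks the most likely expression label. It assumes the detection result exposes an `expressions` object that maps labels such as `happy` or `neutral` to probabilities, which is how face-api.js appears to report them. The helper name `dominantExpression` and the sample values are hypothetical.

```javascript
// Hypothetical helper: returns the most likely expression, or null when
// nothing reaches the minimum probability.
// Assumption: `expressions` maps labels to probabilities between 0 and 1.
function dominantExpression(expressions, minProbability = 0.05) {
  let best = null;
  for (const [label, probability] of Object.entries(expressions)) {
    // ignore non-numeric entries and values below the threshold
    if (typeof probability !== "number" || probability < minProbability) {
      continue;
    }
    if (best === null || probability > best.probability) {
      best = { label, probability };
    }
  }
  return best;
}

// Made-up sample values standing in for `result.expressions`.
const sample = { neutral: 0.1, happy: 0.85, sad: 0.03, surprised: 0.02 };
console.log(dominantExpression(sample)); // -> { label: 'happy', probability: 0.85 }
```

Inside the detection loop, something like `dominantExpression(result.expressions, minProbability)` could feed a status message or an alert to other participants.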

Please try out this example in our CodePen here and integrate this into your own application after signing up for a free LiveSwitch trial.

Need assistance in architecting the perfect WebRTC application? Let our team help out! Get in touch with us today!