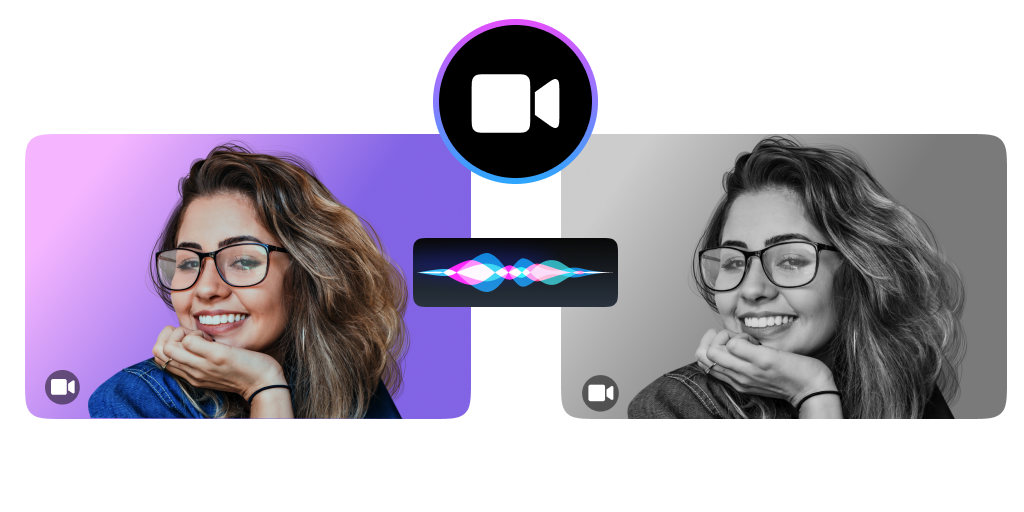

WebRTC Streams before Encoder: Transform to Black and White

In this blog post, I will walk you through how to transform your local video before it is sent. LiveSwitch’s SDK lets you perform video transformations at several points in the media life cycle. This article focuses on the earliest one, before the frames reach the encoder. Any transformation is possible, but in this example we will convert the video to black and white (greyscale). We expect the camera frames to arrive in a YUV format (typically NV12). We will neutralize the UV (chroma) components so that only the Y (luma, or brightness) information remains.
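Why does neutral chroma give grey? U and V are stored as unsigned bytes centered on 128. A value of 128 means "no color", while 0 is a strong color shift. The minimal sketch below converts a single pixel to RGB with a hypothetical yuvToRgb helper. It assumes full-range BT.601 coefficients. Real camera frames may be limited range or BT.709, which changes the constants slightly but not the conclusion.

```javascript
// Hypothetical helper: convert one YUV pixel to RGB.
// Assumes full-range BT.601 coefficients; real camera frames may use
// limited range and/or BT.709, which changes the constants slightly.
function yuvToRgb(y, u, v) {
  // Chroma is stored offset by 128, so 128 means "no color".
  const cb = u - 128;
  const cr = v - 128;
  const clamp = (x) => Math.min(255, Math.max(0, Math.round(x)));
  return [
    clamp(y + 1.402 * cr),                    // R
    clamp(y - 0.344136 * cb - 0.714136 * cr), // G
    clamp(y + 1.772 * cb),                    // B
  ];
}

// Neutral chroma: every channel equals Y, i.e. a shade of grey.
console.log(yuvToRgb(100, 128, 128)); // [ 100, 100, 100 ]
// Zeroed chroma: red and blue collapse to 0, green is boosted.
console.log(yuvToRgb(100, 0, 0)); // [ 0, 235, 0 ]
```

This is why zeroing the UV bytes tints the picture green. Setting them to 128 leaves pure brightness.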

To begin, we need access to the camera's video stream.

```javascript
const stream = await navigator.mediaDevices.getUserMedia(constraints);
```
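The constraints object passed to getUserMedia is not shown here. Below is a minimal sketch of one. It assumes a request for roughly 1280x720 video with no audio, and it also pulls out the first video track, which is what the track processor consumes. Both constraints and openCameraTrack are hypothetical. The helper takes mediaDevices as a parameter, so the same code can run against navigator.mediaDevices in the browser or against a stub in tests.

```javascript
// Hypothetical example constraints: request roughly 720p video, no audio.
const constraints = {
  audio: false,
  video: { width: { ideal: 1280 }, height: { ideal: 720 } },
};

// Hypothetical helper: open the camera and return the stream plus its
// first video track. `mediaDevices` is injected (normally
// navigator.mediaDevices) so the logic can be exercised with a stub.
async function openCameraTrack(mediaDevices, constraints) {
  const stream = await mediaDevices.getUserMedia(constraints);
  const [videoTrack] = stream.getVideoTracks();
  if (!videoTrack) {
    throw new Error("getUserMedia returned a stream with no video track");
  }
  return { stream, videoTrack };
}

// In the browser:
// const { stream, videoTrack } = await openCameraTrack(navigator.mediaDevices, constraints);
```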

The stream represents a continuous flow of data from the user's media devices. Our next step is to get its video track and create our new video processing resources.

```javascript
// get the camera's video track from the stream
const [videoTrack] = stream.getVideoTracks();

// create a processor that exposes the track's frames as a readable stream
const trackProcessor = new MediaStreamTrackProcessor({ track: videoTrack });

// create a generator that turns written frames back into a video track
const trackGenerator = new MediaStreamTrackGenerator({ kind: "video" });
```

The track processor is given the local media’s video track. It exposes that track's frames through its readable stream, which is the start of the pipeline that lets us transform the video. The track generator does the reverse. It takes frames one at a time through its writable stream and outputs them as a new video track; the generator is itself a MediaStreamTrack.
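MediaStreamTrackProcessor and MediaStreamTrackGenerator are not available in every browser. At the time of writing, they are available on the main thread only in Chromium-based browsers. A small feature check lets you fall back to sending the untransformed camera stream instead of failing. The sketch below uses a hypothetical supportsTrackTransforms helper.

```javascript
// Hypothetical helper: true when the track processor/generator APIs used in
// this post exist in `scope` (normally `window` / `globalThis`).
function supportsTrackTransforms(scope = globalThis) {
  return (
    typeof scope.MediaStreamTrackProcessor === "function" &&
    typeof scope.MediaStreamTrackGenerator === "function"
  );
}

// Usage sketch: only build the pipeline when it is supported.
// if (!supportsTrackTransforms()) { /* send the original stream instead */ }
```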

Next, we define our transformer. This is the part you would change to get a different effect. The transformer operates on a single frame at a time.
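Because the transformer sees one chunk at a time, it is worth deciding up front what happens to chunks it cannot handle. The sketch below is built around a hypothetical createPassThroughTransformer factory. It forwards unsupported chunks unchanged instead of dropping them. With real VideoFrames, a frame that is neither enqueued nor closed leaks memory and can stall the camera. The example uses plain numbers, so it runs anywhere TransformStream exists (modern browsers, Node 18+).

```javascript
// Hypothetical factory: apply `process` to chunks accepted by `canProcess`
// and pass every other chunk through untouched.
// With VideoFrames, `process` is responsible for closing the input frame
// once it has produced a new one.
function createPassThroughTransformer(canProcess, process) {
  return new TransformStream({
    async transform(chunk, controller) {
      if (!canProcess(chunk)) {
        controller.enqueue(chunk); // forward unsupported input unchanged
        return;
      }
      controller.enqueue(await process(chunk));
    },
  });
}

// Demo with numbers: multiply odd values by 10, pass even values through.
async function demo() {
  const source = new ReadableStream({
    start(controller) {
      [1, 2, 3].forEach((n) => controller.enqueue(n));
      controller.close();
    },
  });
  const reader = source
    .pipeThrough(createPassThroughTransformer((n) => n % 2 === 1, (n) => n * 10))
    .getReader();
  const out = [];
  for (;;) {
    const { value, done } = await reader.read();
    if (done) break;
    out.push(value);
  }
  return out;
}

demo().then((out) => console.log(out)); // [ 10, 2, 30 ]
```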

```javascript
const transformer = new TransformStream({
  async transform(videoFrame, controller) {
    // NV12 and I420 both store a full-size Y (luma) plane followed by the
    // chroma data, so the same approach works for either format
    if (videoFrame.format !== "NV12" && videoFrame.format !== "I420") {
      console.error(`Unhandled color format: ${videoFrame.format}`);
      // pass the frame through unchanged so the video does not stall
      controller.enqueue(videoFrame);
      return;
    }

    // create a buffer to store the frame data (sized for the visible area)
    const buffer = new Uint8Array(videoFrame.allocationSize());

    // copy the frame to the buffer; layout describes where each plane starts
    const layout = await videoFrame.copyTo(buffer);

    // set every chroma (U/V) byte to 128, the neutral value, leaving only
    // brightness; setting them to 0 instead would turn the image green
    buffer.fill(128, layout[1].offset);

    // generate a new frame from the buffer, reusing the original frame's
    // settings; copyTo copied only the visible area, so use its dimensions
    const greyFrame = new VideoFrame(buffer, {
      format: videoFrame.format,
      codedWidth: videoFrame.visibleRect.width,
      codedHeight: videoFrame.visibleRect.height,
      layout,
      timestamp: videoFrame.timestamp,
      colorSpace: videoFrame.colorSpace,
    });

    // close the original frame since it is no longer needed
    videoFrame.close();

    // add the newly transformed frame to the queue for the stream
    controller.enqueue(greyFrame);
  },
});
```
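The core of the effect is a single byte fill over the chroma data. In NV12, the full-resolution Y (luma) plane comes first. It is followed by a half-height plane of interleaved U/V pairs, so everything from the second plane's offset to the end of the buffer is chroma. The sketch below uses a hypothetical nv12Layout helper that mimics the { offset, stride } plane layouts that VideoFrame.copyTo() resolves with. It assumes a tightly packed buffer with no row padding. A second hypothetical helper, neutralizeChroma, fills the chroma with 128, which keeps the result grey rather than green.

```javascript
// Hypothetical helper: plane layout of a tightly packed NV12 buffer,
// in the same { offset, stride } shape that VideoFrame.copyTo() reports.
function nv12Layout(width, height) {
  const ySize = width * height;
  return [
    { offset: 0, stride: width },     // Y plane: width x height bytes
    { offset: ySize, stride: width }, // UV plane: interleaved U,V pairs
  ];
}

// Hypothetical helper: make a 4:2:0 frame grey by setting every chroma byte
// to the neutral value 128. Valid for NV12 and I420, whose chroma data
// follows the luma plane and runs to the end of the buffer.
function neutralizeChroma(buffer, layout) {
  buffer.fill(128, layout[1].offset);
  return buffer;
}

// A 4x2 NV12 frame: 8 luma bytes + 4 chroma bytes (2 U/V pairs).
const width = 4;
const height = 2;
const frame = new Uint8Array(width * height * 1.5).fill(7);
neutralizeChroma(frame, nv12Layout(width, height));
console.log(frame.join(",")); // 7,7,7,7,7,7,7,7,128,128,128,128
```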

With the transformation logic and the pipeline resources in place, we can connect them into a processing pipeline.

```javascript
trackProcessor.readable
  .pipeThrough(transformer)
  .pipeTo(trackGenerator.writable);
```
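pipeTo() returns a promise that settles when the pipeline ends. It also accepts an AbortSignal, so the same chain can be stopped cleanly (for example, when leaving the meeting) and its errors surfaced. Here is a minimal sketch using a hypothetical startPipeline helper. It works with any readable stream, TransformStream, and writable stream, including trackProcessor.readable, transformer, and trackGenerator.writable. By default, aborting the pipe cancels the source and aborts the destination.

```javascript
// Hypothetical helper: run readable -> transformer -> writable and expose a
// `stop()` that aborts the pipe via an AbortSignal.
function startPipeline(readable, transformer, writable) {
  const abort = new AbortController();
  const done = readable
    .pipeThrough(transformer)
    .pipeTo(writable, { signal: abort.signal });
  return { done, stop: () => abort.abort() };
}

// Usage sketch in the browser:
// const pipeline = startPipeline(trackProcessor.readable, transformer, trackGenerator.writable);
// pipeline.done.catch((err) => console.warn("video pipeline ended:", err));
// ...later: pipeline.stop();
```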

This reads frames from our processor, passes each one through our transformer, and writes the transformed frames into our track generator. Next, we create a new MediaStream containing the generator's track (the generator is itself a video track) and hand this stream to our LocalMedia object.

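```javascript
const streamAfter = new MediaStream([trackGenerator]);

const localMedia = new fm.liveswitch.LocalMedia();

// the _internal members appear to be SDK internals (note the underscore
// prefix), so they may change between LiveSwitch versions
localMedia._internal._setVideoMediaStream(streamAfter);
localMedia._internal.setState(fm.liveswitch.LocalMediaState.Started);
```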

We now have our fully transformed local video track! Next, we add it to our layout so we can see the same local video that others in the meeting will see.

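```javascript
// player is the container element (for example a div) that holds the video views
const layoutManager = new fm.liveswitch.DomLayoutManager(player);

layoutManager.setLocalView(localMedia.getView());
```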

Finally, we connect to the meeting and start sending our transformed video for others to view.

```javascript
// gateway is your LiveSwitch Gateway URL and app is your application ID
const client = new fm.liveswitch.Client(gateway, app);

const claims = [new fm.liveswitch.ChannelClaim(channelId)];

// ss is the application's shared secret; in production, generate the
// register token on your server so the secret never reaches the browser
const token = fm.liveswitch.Token.generateClientRegisterToken(client, claims, ss);

const channels = await client.register(token);
const channel = channels[0];

const videoStream = new fm.liveswitch.VideoStream(localMedia);
const connection = channel.createSfuUpstreamConnection(videoStream, "camera-0");
connection.open();
```

As I mentioned before, this pattern can be used to apply any transformation to your local video. However, you should be aware of how much additional processing each transformation adds. Because this work happens on the local device for every frame, test your transformation's performance on the oldest devices you plan to support. A transformation that works flawlessly on an iPhone 15 Pro may cause an older iPhone SE to overheat and shut down within minutes.
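One way to follow that advice is to time the per-frame work. At 30 fps, each frame has a budget of about 33 ms (1000 / 30) for capture, transformation, and encoding combined, so the transform itself should use only a small part of that. Below is a minimal sketch built on performance.now(). The timeTransform wrapper and the frameBudgetMs helper are both hypothetical. You could wrap the body of transform() with timeTransform and log its stats periodically while testing on your slowest target device.

```javascript
// Hypothetical wrapper: measure how long each call to `fn` takes
// (including async work) and keep simple running statistics.
function timeTransform(fn) {
  const stats = { calls: 0, totalMs: 0, maxMs: 0 };
  async function timed(...args) {
    const start = performance.now();
    try {
      return await fn(...args);
    } finally {
      const elapsed = performance.now() - start;
      stats.calls += 1;
      stats.totalMs += elapsed;
      stats.maxMs = Math.max(stats.maxMs, elapsed);
    }
  }
  const averageMs = () => (stats.calls ? stats.totalMs / stats.calls : 0);
  return { timed, stats, averageMs };
}

// Hypothetical helper: per-frame budget in milliseconds for a frame rate.
const frameBudgetMs = (fps) => 1000 / fps;
console.log(frameBudgetMs(30).toFixed(1)); // 33.3
```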

Stay tuned for some additional articles on how to transform your media using the power of the LiveSwitch SDK.

Need assistance in architecting the perfect WebRTC application? Let our team help out! Get in touch with us today!